Live streams usually last several hours with many viewers joining in the middle. Viewers who join in the middle often want to understand what has happened in the stream. However, catching up with the earlier parts is challenging because it is difficult to know which parts are important in the long, unedited stream while also keeping up with the ongoing stream. We present CatchLive, a system that provides a real-time summary of ongoing live streams by utilizing both the stream content and user interaction data. CatchLive provides viewers with an overview of the stream along with summaries of highlight moments with multiple levels of detail in a readable format. Results from deployments of three streams with 67 viewers show that CatchLive helps viewers grasp the overview of the stream, identify important moments, and stay engaged. Our findings provide insights into designing summarizations of live streams reflecting their characteristics.

CatchLive provides real-time summarization of live streams to help viewers who join in the middle catch up on content they missed.

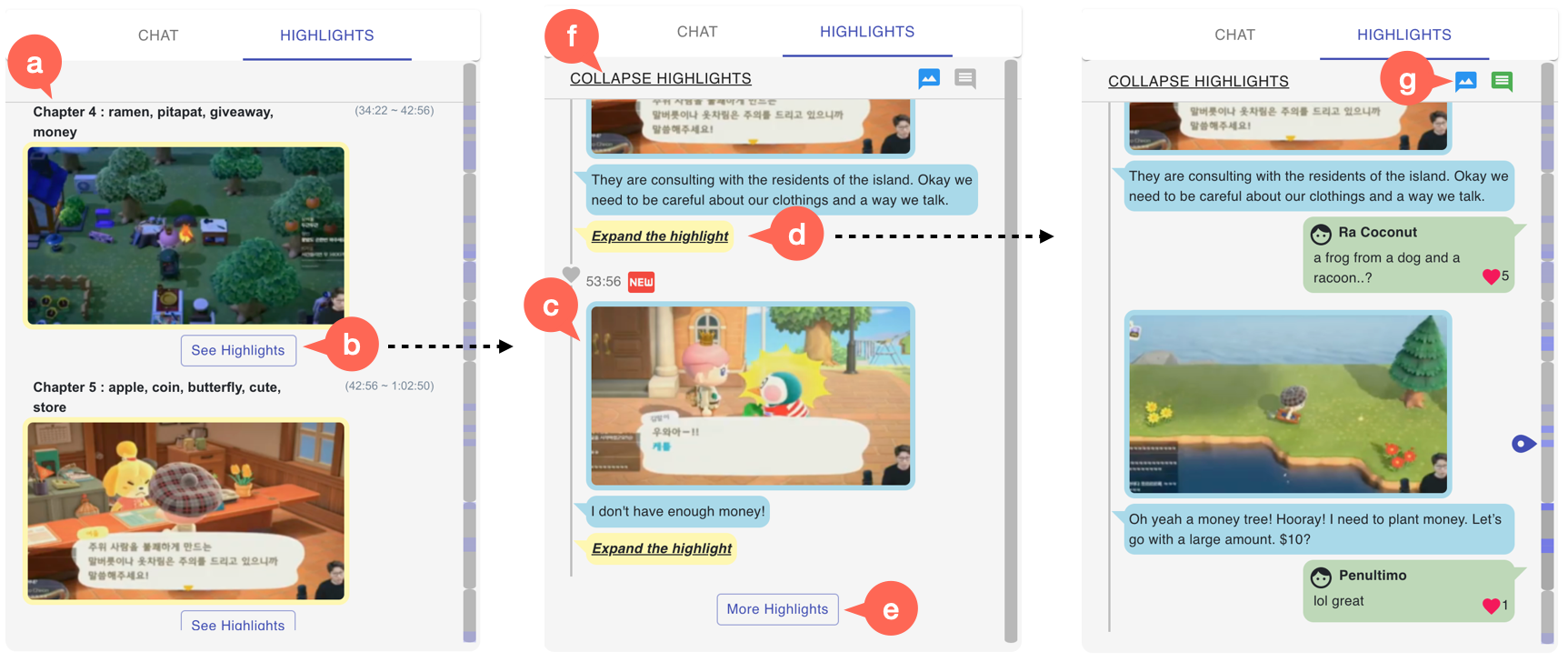

It presents (a) the overall structure of the stream by segmenting it into high-level sections. (b) Hovering over a section shows brief information about it. For each section, it provides (d) a summary of highlight moments, which are (c) color-coded in the timeline. The highlights are available on demand, so viewers can see more or less detail depending on their needs. We present the highlights in a readable chat format so that users can skim them with minimal interruption to the current stream.

CatchLive presents the summary of a live stream in two parts: the Timeline and the Highlights. The timeline is shown below the video, and the highlights are provided in the Highlights tab.

The timeline information is also (a) displayed in a vertical view in the Highlights tab, with a representative screenshot and keywords for each section. When a user is interested in a section, (b) they can see its highlights by clicking the See Highlights button. (c) The top two highlight moments are then revealed, each with a representative snapshot and a sentence of the transcript. Users can choose to see either (d) more details about a particular highlight or (e) other highlights from that section. (f) Collapse Highlights brings the user back to the initial view (left). When a user asks for more details about a particular highlight, the rest of its snapshots and transcripts are shown (right). Users can also see other viewers’ chat messages from that moment by (g) clicking the chat icon.

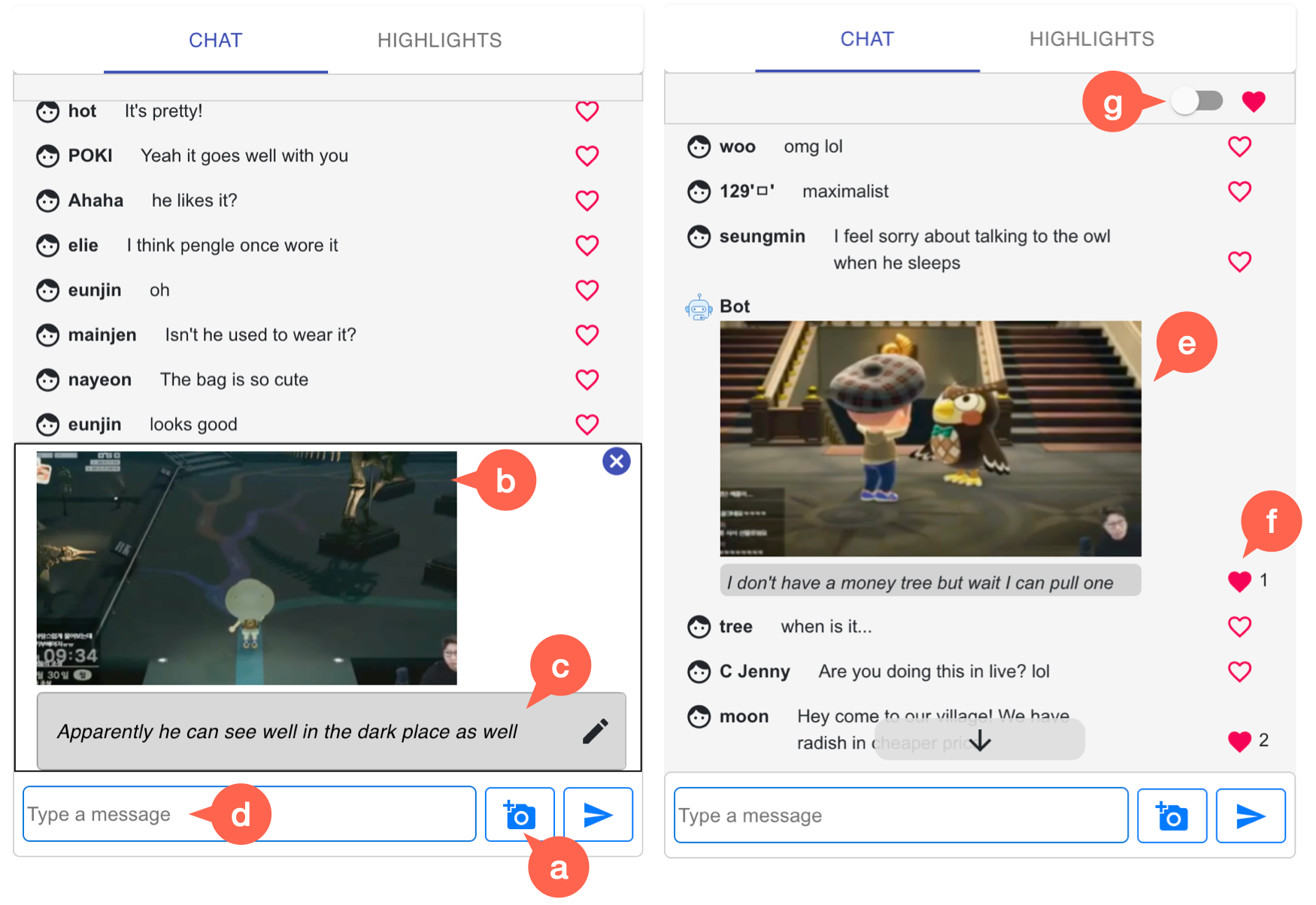

CatchLive leverages user interaction data to provide a summary of live streams. In addition to conventional chat messaging, users can (e) take snapshots of the stream to share interesting or important moments of the stream, and (f) “like” others’ messages.

We develop two core algorithms for CatchLive: (1) a real-time, online segmentation algorithm that partitions the stream into meaningful sections as the stream progresses, and (2) a highlights detection algorithm that extracts important moments from each section.

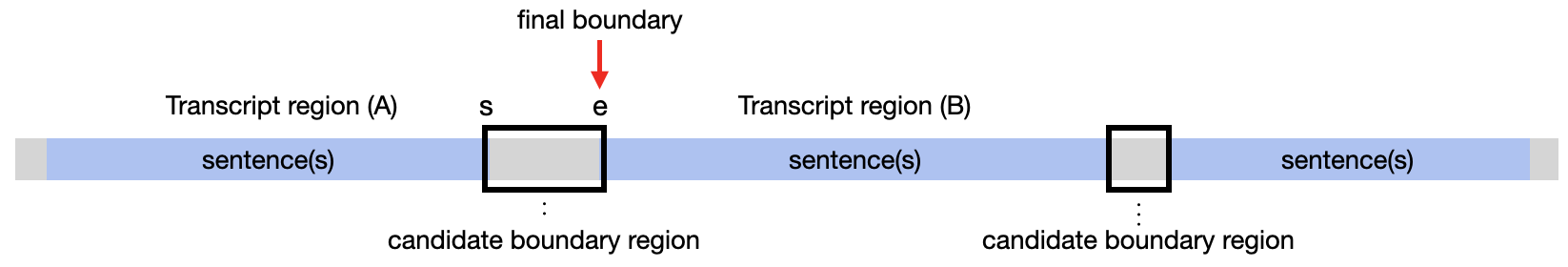

Our online segmentation algorithm first identifies candidate boundary regions, which are intervals between the end of a transcription region and the start of the next transcription region. For each candidate boundary region, we calculate a score with the following five factors:

The endpoint of the candidate boundary region with the highest score is chosen as the next section boundary. The process repeats once the current portion (i.e., from the last identified boundary to the current point) exceeds a certain length.
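The selection loop described above can be sketched as follows. This is only a minimal illustration under stated assumptions, not the system's implementation: the five scoring factors are not listed here, so the sketch substitutes a placeholder score (the length of the gap), and the names `Region`, `candidate_gaps`, `gap_length`, `next_boundary`, and the `min_length` threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Region:
    """A transcription region: a span of continuous speech, in seconds."""
    start: float
    end: float


# A candidate boundary region is the gap between two transcription regions.
Gap = Tuple[float, float]


def candidate_gaps(regions: List[Region], after: float) -> List[Gap]:
    """Gaps between consecutive transcription regions that begin after `after`."""
    ordered = sorted(regions, key=lambda r: r.start)
    return [
        (a.end, b.start)
        for a, b in zip(ordered, ordered[1:])
        if a.end > after and b.start > a.end
    ]


def gap_length(gap: Gap) -> float:
    """Placeholder score (assumption): a longer pause scores higher.
    The real score combines five factors that are not listed in this text."""
    return gap[1] - gap[0]


def next_boundary(
    regions: List[Region],
    last_boundary: float,
    now: float,
    min_length: float = 300.0,  # hypothetical threshold, in seconds
    score: Callable[[Gap], float] = gap_length,
) -> Optional[float]:
    """Return the next section boundary, or None if it is too early to cut."""
    # Only segment once the current portion has grown long enough.
    if now - last_boundary < min_length:
        return None
    gaps = [g for g in candidate_gaps(regions, last_boundary) if g[1] <= now]
    if not gaps:
        return None
    best = max(gaps, key=score)
    # Assumption: "endpoint" means the end of the best-scoring gap.
    return best[1]
```

In a running system this function would be called repeatedly as new transcription regions arrive, with `last_boundary` updated each time a boundary is returned.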

To detect highlights, we divide a section into one-minute intervals and calculate a score for each interval with the following three factors:

We then normalize the score considering the number of viewers at each interval to make sure highlights are distributed more evenly. After scoring each interval, we determine the peak using a peak detection algorithm.
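The highlight pipeline above (per-minute scoring, normalization by viewer count, peak detection) can be sketched as follows. This is a hedged illustration rather than the actual algorithm: the three scoring factors are not listed here, so the sketch simply counts interaction events per interval, and it uses a plain local-maximum test as a stand-in for the unspecified peak detection algorithm. All function names are hypothetical.

```python
import math
from typing import List


def interval_scores(
    event_times: List[float],
    section_start: float,
    section_end: float,
    interval: float = 60.0,
) -> List[float]:
    """Count events in each one-minute interval of a section.
    Assumption: the raw score is an unweighted event count."""
    n = max(1, math.ceil((section_end - section_start) / interval))
    counts = [0.0] * n
    for t in event_times:
        if section_start <= t < section_end:
            counts[int((t - section_start) // interval)] += 1
    return counts


def normalize(scores: List[float], viewers: List[int]) -> List[float]:
    """Divide each interval's score by the number of viewers in that interval."""
    return [s / v if v > 0 else 0.0 for s, v in zip(scores, viewers)]


def find_peaks(scores: List[float], min_score: float = 0.0) -> List[int]:
    """Indices of local maxima above `min_score` (first index of a plateau)."""
    peaks = []
    for i, s in enumerate(scores):
        left = scores[i - 1] if i > 0 else float("-inf")
        right = scores[i + 1] if i + 1 < len(scores) else float("-inf")
        if s > min_score and s > left and s >= right:
            peaks.append(i)
    return peaks


# Example: timestamps (seconds) of interaction events in a four-minute section.
events = [5, 10, 70, 75, 80, 90, 130, 200, 205, 210, 215]
raw = interval_scores(events, 0, 240)
normalized = normalize(raw, viewers=[10, 40, 10, 10])
highlight_minutes = find_peaks(normalized)
```

In this example the second minute has the most raw activity but also four times the viewers, so after normalization the first minute is picked instead. That is the kind of rebalancing the normalization step is meant to achieve.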

The table below shows example timeline information for a cooking stream, produced by the online segmentation algorithm. Participants mentioned that the timeline helped them grasp the overview of the stream. They mentioned that Highlights helped them identify important moments, understand more about the stream, and fill the void in live streams. The timeline and Highlights allowed them to catch up with less interruption compared to rewinding, although more information is needed to fully understand the previous context.

| Section | 1 | 2 | 3 |
|---|---|---|---|
| Snapshot | | | |
| Keywords | Mic, Hello, Today, Shanghai | Ingredients, Shanghai Pasta, Easy, Chili oil | Noodle, Pasta, Ingredients, Look |
| Time | 01:04-17:23 | 17:23-24:08 | 24:08-36:07 |
| *Description | Greeting | Ingredients introduction | Ingredients preparation |

| Section | 4 | 5 | 6 |
|---|---|---|---|
| Snapshot | | | |
| Keywords | Wine, Water, Put, Later | Pre-cooking, In advance, Pasta, Made it | Curious, Looks good, Made it, Pasta |
| Time | 36:07-46:30 | 46:30-1:05:30 | 1:05:30-1:13:45 |
| *Description | Cooking on the pan | Cooking while talking about the concept of pre-cooking | Tasting |

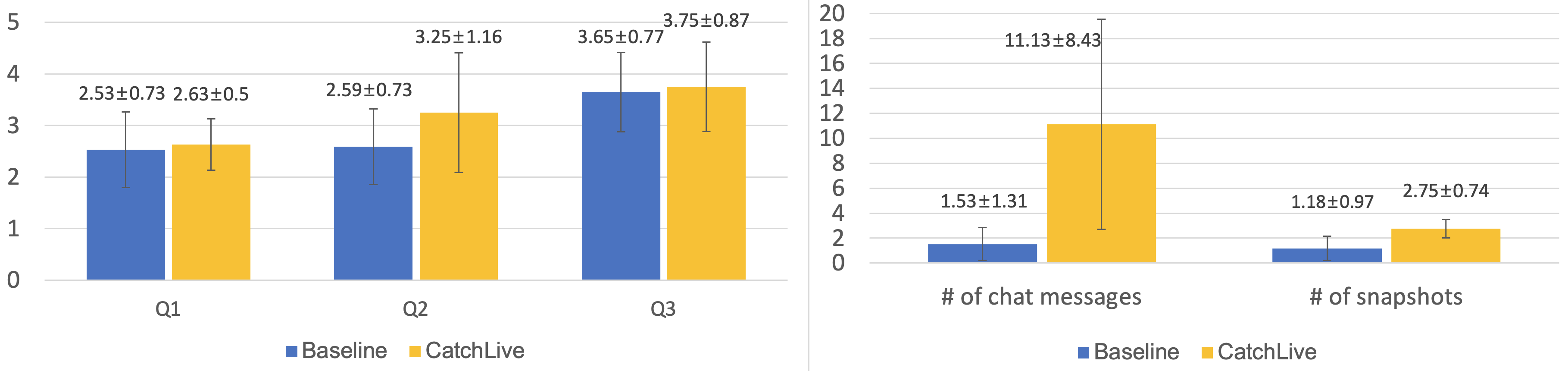

With a game-playing stream, participants using CatchLive were more engaged with the stream than the baseline group. There was no significant difference in their level of understanding.

Lastly, across three different stream genres, we found that the perceived usefulness of the system differs by genre. The table below describes how each characteristic of streams affected users’ experiences and how it should be reflected in designing real-time summarizations of live streams.

| Characteristics | | Findings | Design Implications |
|---|---|---|---|
| Content Type | Procedural | The timeline gave step information. | Accurate label of each step; full coverage of all steps |
| | Unstructured | The keywords in the timeline were helpful in checking previous topics. | Concrete keywords that distinguish a section from others |
| Content Format | Visual | Snapshots gave a quick overview of highlights. | Representative snapshots; short clips of a highlight |
| | Audial | Relying on transcripts to catch up required much time and effort. | Accurate transcription; text summarization techniques |
| Stream Pace | Slow | The summary features were not much of a distraction. | More levels of detail of highlights; short clips of a highlight |
| | Fast | The summary features could be distracting. | Shorter highlight moments; combining multiple sources into one |


@inproceedings{yang2022catchlive,

author = {Yang, Saelyne and Yim, Jisu and Kim, Juho and Shin, Hijung Valentina},

title = {CatchLive: Real-Time Summarization of Live Streams with Stream Content and Interaction Data},

year = {2022},

isbn = {9781450391573},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3491102.3517461},

doi = {10.1145/3491102.3517461},

abstract = { Live streams usually last several hours with many viewers joining in the middle. Viewers who join in the middle often want to understand what has happened in the stream. However, catching up with the earlier parts is challenging because it is difficult to know which parts are important in the long, unedited stream while also keeping up with the ongoing stream. We present CatchLive, a system that provides a real-time summary of ongoing live streams by utilizing both the stream content and user interaction data. CatchLive provides viewers with an overview of the stream along with summaries of highlight moments with multiple levels of detail in a readable format. Results from deployments of three streams with 67 viewers show that CatchLive helps viewers grasp the overview of the stream, identify important moments, and stay engaged. Our findings provide insights into designing summarizations of live streams reflecting their characteristics. },

booktitle = {CHI Conference on Human Factors in Computing Systems},

articleno = {500},

numpages = {20},

keywords = {video summarization, live summarization, user interaction data, live streaming},

location = {New Orleans, LA, USA},

series = {CHI '22}

}